Storage Glossary

19-inch rack

A standardized frame or enclosure, 19" wide, for mounting multiple electronic equipment modules stacked one above another on two parallel vertical rails. The front plate of a module measures 19 inches (482.60mm) wide in total, including the screw mounting area, hence the name; the clear area between the vertical mounting rails (the "rack opening") is 17.75" (450.85mm). One height unit (a "rack unit", measured in "U": 1U, 2U, 3U, etc.) is 1.75" (44.45mm) high. 19" racks may be built as open frames, offering essentially only the vertical mounting rails ("two-post racks", popular in telco applications), or as open or closed cabinets (usually 24" wide, 609.6mm), optionally with additional internal width outside the rail area for more elaborate cabling. 19" rack modules may be short or very deep ("mounting depth"). Larger enclosures, often taller and usually very deep, are commonly screwed to both the front and rear posts to properly support their weight. When ball-bearing rack rails are used as support in this four-post mounting, the whole enclosure can be pulled out like a drawer for easier access.

In some space-constrained applications, the half-width rack format, a 9.5" or 10-inch rack, has become popular. Having two devices share the horizontal space of one height unit in a 19" rack is popular in audio applications and has been gaining attention for computer installations in recent years. Following the overall smaller footprint, 10" racks are usually less deep than common 19" server cabinets. A 10-inch rack measures exactly 10" (254.00mm) from side to side (front plate), including the screw mounting area. The usable width, the clear area between the vertical mounting rails ("rack opening"), is 8.75" (222.25mm). Both standards, 10" and 19", use rails of the same width, 0.625" (15.875mm). Apart from saving space in commercial installations, 10-inch rack frames are deployed in military, airborne or otherwise weight- or space-constrained scenarios. While dedicated 10-inch switches, hubs and computer enclosures are available, it is common in SoHo or business environments to install consumer routers or similarly small devices, not specifically made for 10" racks, in a DIY fashion on 10-inch rack shelves. The Help section has an in-depth document on rack mount equipment.
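
The dimensions in this entry are related by simple arithmetic, which the following minimal sketch reproduces. It assumes the standard rack unit of 1.75" and the rail width of 0.625" quoted above; the function names are illustrative only and not taken from any standard.

```python
MM_PER_INCH = 25.4
RACK_UNIT_IN = 1.75     # height of one rack unit (1U), in inches
RAIL_WIDTH_IN = 0.625   # width of one vertical mounting rail, in inches


def to_mm(inches: float) -> float:
    """Convert inches to millimetres."""
    return inches * MM_PER_INCH


def unit_height_in(units: int) -> float:
    """Height of an enclosure occupying `units` rack units, in inches."""
    return units * RACK_UNIT_IN


def rack_opening_in(front_width_in: float) -> float:
    """Clear width between the rails: front plate width minus both rails."""
    return front_width_in - 2 * RAIL_WIDTH_IN


for name, width in (('19"', 19.0), ('10"', 10.0)):
    opening = rack_opening_in(width)
    print(f"{name} rack opening: {opening} in = {to_mm(opening):.2f} mm")

for units in (1, 2, 4):
    height = unit_height_in(units)
    print(f"{units}U = {height} in = {to_mm(height):.2f} mm")
```

Subtracting both rails from the front plate width yields the rack openings of 17.75" and 8.75" stated in the entry.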

3.5-Inch Floppy Disk

The third and last step in floppy disk formats, the 3.5" floppy disk marked the pinnacle of widespread portable removable magnetic storage. Enjoying its widest popularity throughout the 1990s, the smaller flexible disk inside a self-contained rigid plastic case was the go-to mobile storage medium for many 16-bit home computer systems, business PCs and musical instruments. The 3.5" disk became synonymous with "saving to disk" and remains the common user-interface icon for saving data to non-volatile storage to this day.

4K video

(as a challenge, in a video storage context) "4K" video refers to video with roughly four times the pixel count of full High-Definition 1080p video. A higher resolution means that the video image is potentially sharper than at lower resolutions. In practice, there are two standard resolutions referred to as "4K video": 4096x2160 pixels for the film and video production industry and 3840x2160 pixels for television and monitors. While the data consumption of video depends heavily on the codec and bit rate used, one minute of 4K video can use up more than a gigabyte of space. By estimate, an hour of 4K video takes approximately 45 GB of storage space. Handling 4K video requires the underlying storage to provide sufficient I/O, with sustained read/write speeds north of roughly 25 to 32 MB per second (200 to 256 Mbit/s).

5.25-Inch Floppy Disk

An intermediary step in floppy disk formats, the 5.25" floppy disk defined a whole era of computing, in business and in the home, as the widely used storage medium for home computers. Popular between 1980 and 1990, it was the go-to mobile storage medium for the Commodore C64, IBM XT and AT computers, IBM compatibles and many more. The floppy disk is synonymous with "storing", and its shape is the user-interface icon for saving data to non-volatile storage to this day. With Micropolis being an early manufacturer of 5.25-inch floppy disk drives, our knowledge base has an extensive article on 5.25" floppy disk variants.

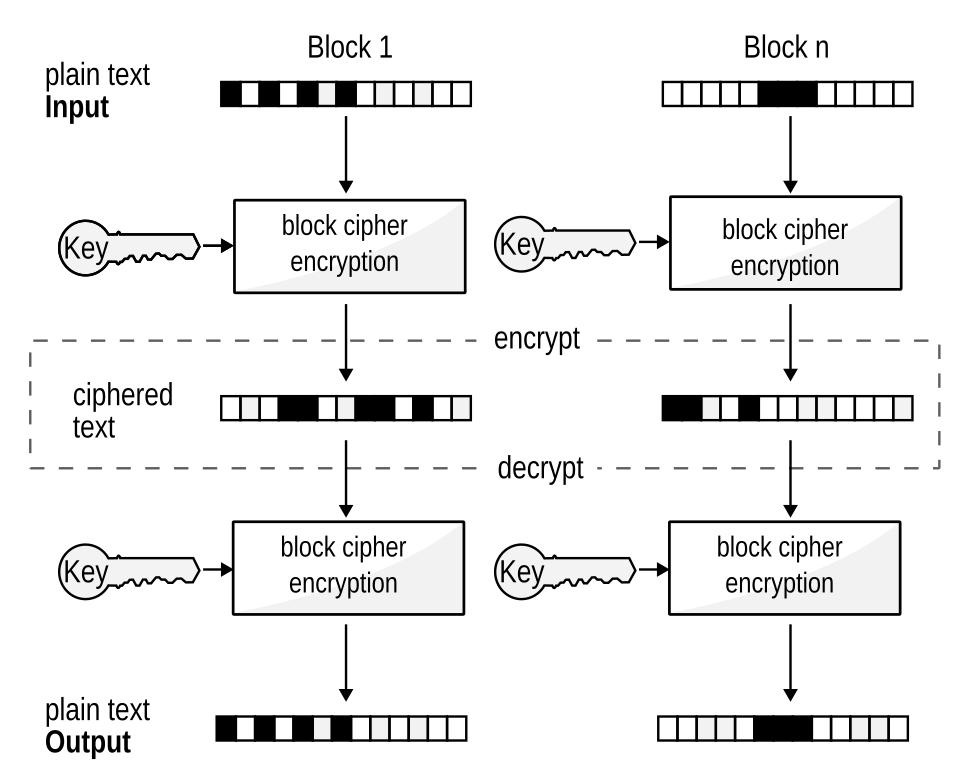

AES-256-CCM

AES is short for the symmetric encryption algorithm "Advanced Encryption Standard", also known as Rijndael, a name derived from its two Belgian inventors, Joan Daemen and Vincent Rijmen. AES is a variant of the Rijndael family of block ciphers; "256" describes the key length in bits, one of the key sizes selected during standardization by the US National Institute of Standards and Technology (NIST). AES-256 is used in encryption, authentication and data storage. The added "CCM" is the abbreviation of "Counter with Cipher Block Chaining Message Authentication Code", also written "Counter with CBC-MAC" or "CCM mode". This mode of operation combines counter-mode encryption with integrity checking through CBC-MAC and is used by applications that need strong encryption together with authentication.

AHCI

Short for "Advanced Host Controller Interface". In comparison with NVMe, which is commonly used for solid-state storage devices, AHCI is tuned towards spinning magnetic media, which are usually slower and have their own complications. As such, in solid-state contexts, AHCI has a number of performance drawbacks that NVMe removes.

Allocate-on-flush

Also called "delayed allocation", this is an optimization scheme found in some file systems (e.g. XFS, ZFS, Reiser4). Data scheduled to be written to storage isn't written immediately but held in memory until memory runs out or the 'sync' system call is issued. This reduces processing overhead and helps mitigate the fragmentation inherent in block-mapped data storage, as multiple smaller writes can be combined into faster, better-aligned long sequential writes. This is especially useful in combination with a copy-on-write (CoW) scheme.

ACID properties

A common set of guarantees in information technology, usually found in database management systems (DBMS) or distributed systems. ACID is short for "atomicity, consistency, isolation, durability", describing data validity expectations in the face of physical hardware failures, software errors or a combination thereof.

Adler-32

Adler-32 is Mark Adler's simple and efficient checksum algorithm. It is used for error detection in data transmission or storage where speed is more important than reliability.

Air Gapping

From "air gap", also "air wall": a security measure used in computer networking. The term means isolating a computer, a group of computers or an entire computer network from other, potentially less secure networks ("disconnected network"). In practice, this can be achieved in two ways: by total physical isolation, where all wired and wireless connections are severed, or by logical isolation, using strict firewall rules, encryption or network segmentation.

Active Directory (short "AD")

Microsoft's Active Directory (AD) is a database and a set of processes and services used by Windows Server operating systems to authenticate and authorize all users and computers in a centralized Windows domain network. It was designed for managing network resources and enhancing security. Active Directory stores all user accounts and groups, security policies, trust relationships and domain controllers used by an enterprise. AD is orchestrated by a server running the "AD DS" (Active Directory Domain Services) role. In summary, AD defines who can access or modify which object.

AFP (Apple Filing Protocol)

The Apple Filing Protocol (AFP), originally "AppleTalk Filing Protocol", is a discontinued network file sharing and control protocol developed by Apple, originally designed for sharing files between Macintosh workstations and servers. It was commonly used for transferring large files, for example in video storage or editing environments. Files were served under a global namespace in URI form, prefixed with "afp://". It allowed servers and users to use various methods of user authentication, controlling access and prompting for a password when a user tried to access a resource or volume for the first time. AFP was one part of the more general "Apple File Service" (AFS). AFP was officially discontinued by Apple with macOS Big Sur in 2020 in favor of SMB.

ARIES

"ARIES" is short for "Algorithms for Recovery and Isolation Exploiting Semantics" is a logging and recovery algorithm family primarily used in Database Management Systems. One element of ARIES is "write-ahead logging" (WAL), meaning actions are first recorded to a stable log file and then executed."Aries" was also the model name of the Aries series of Micropolis hard-disk drives, featuring a SCSI2 interface and coming in a 3.5 inch slim-line form-factor.

Auto-Tiering

Storage can be described as being organized in layers, each layer representing a class (or tier) of storage with its own cost and performance characteristics. Data is often classified and allocated to these layers by how often it is accessed, how fast it has to be accessed or how valuable it is. The scheme by which data is assigned to specific layers is called tiering. Tiering may happen manually or automatically. "Automated Storage Tiering" (AST) or "Auto-Tiering" denotes systems that are able to allocate data to specific tiers automatically, based on pre-defined rules, algorithms or artificial intelligence. One system offering this feature set is IBM's "Hierarchical storage management" (HSM). Keeping data on different storage tiers is simply called "Tiered Storage". With cached data ("Data Cache"), data is often moved between different caching tiers, e.g. from in-memory to solid-state devices, and then to magnetic disk or magnetic tape (like LTO tapes) for long-term storage.

ATA over Ethernet

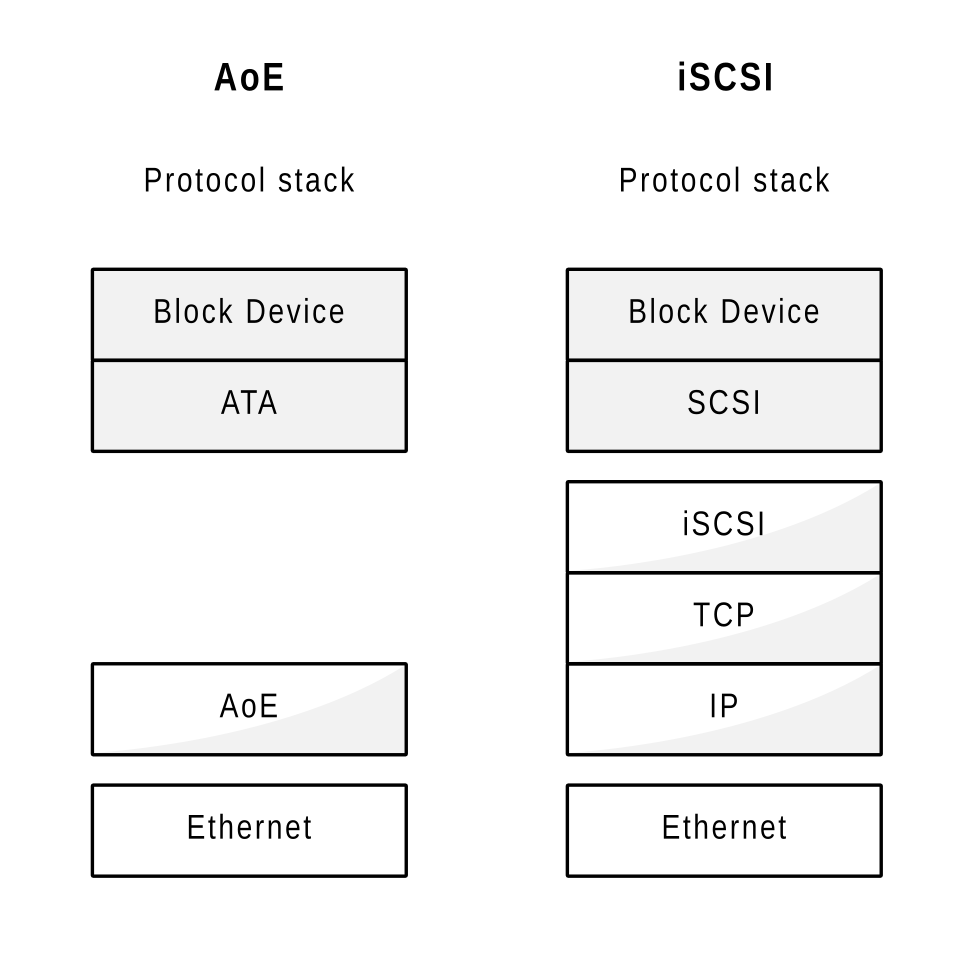

(mostly implemented by Coraid/SouthSuite, Inc.) ATA over Ethernet (AoE) is a network protocol that uses the OSI model's data link layer to access block storage devices over Ethernet networks. It transfers data without the higher-level Transmission Control Protocol/Internet Protocol (TCP/IP) stack, lowering protocol overhead. It is therefore considered leaner and better suited for high-performance access to Storage Area Networks (SAN). As in iSCSI, the host system providing the actual physical data volumes is named the "AoE target", and the system that accesses and mounts the volumes is called the "AoE initiator".
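
To show what "directly on the data link layer" means in practice, here is a hedged sketch that packs an Ethernet header followed by the common AoE header fields. The field layout (version/flags, error, major, minor, command, tag) and EtherType 0x88A2 follow the published AoE specification as far as recalled here and should be checked against it; the function name and the example MAC addresses are assumptions, and nothing is sent on a network.

```python
import struct

AOE_ETHERTYPE = 0x88A2  # EtherType used by AoE frames (no IP, no TCP/UDP)


def build_aoe_header(dst_mac: bytes, src_mac: bytes, major: int, minor: int,
                     command: int, tag: int, version: int = 1,
                     flags: int = 0) -> bytes:
    """Pack the Ethernet header plus the common AoE header (24 bytes)."""
    ver_flags = ((version & 0x0F) << 4) | (flags & 0x0F)
    error = 0  # only meaningful in responses
    return struct.pack("!6s6sHBBHBBI", dst_mac, src_mac, AOE_ETHERTYPE,
                       ver_flags, error, major, minor, command, tag)


# Hypothetical initiator address; broadcast destination, all shelves and slots.
header = build_aoe_header(
    dst_mac=b"\xff" * 6,
    src_mac=bytes.fromhex("020000000001"),
    major=0xFFFF,   # shelf address, 0xFFFF addresses all shelves
    minor=0xFF,     # slot address, 0xFF addresses all slots
    command=1,      # 1 = "Query Config Information" in the AoE specification
    tag=0x12345678,
)
print(len(header), header.hex())
```

The frame carries a shelf/slot address instead of an IP address and port, which is why AoE traffic is not routable beyond the local Ethernet segment.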

Authentication

Essentially, authentication is proving the identity of something or someone. For example, when someone logs on to a computer, the authentication scheme is expected to reliably verify that the person asking to be logged in actually is the person who owns a certain user account. Traditionally, this is done by the user providing a password, as a key, along with the login/account name. As a password alone has proven to offer limited security, more elaborate authentication schemes like Two-Factor Authentication (2FA) have been invented, which ask for additional, often out-of-band, means of identifying a user.

Bandwidth

(or "throughput", in storage) Bandwidth represents the rate at which data can be transferred between computer workstations and servers or between storage devices. It is used as a metric for the performance of storage systems and is expressed in bytes or bits per second. Note the difference from the term "bandwidth" in signal processing, where it means the range between the lowest and highest attainable frequency within a defined signal power envelope.

BeeGFS

(short for "Bee Global File System", formerly known as "Fraunhofer Gesellschaft File System", "FhGFS") Parallel global file system (one single namespace), developed and optimized for high-performance computing (HPC). Wikipedia, Official Website.

Bin Locking (in Video Editing Systems)

Bin Locking is a feature designed for collaborative video editing, known from the popular professional editing suite Avid Media Composer. It prevents multiple users from modifying the same media simultaneously, enabling them to work in the same video project without data corruption. Usually, only the first user is granted write permission, with all subsequent users being given read access only. As such, Bin Locking is an essential feature for professional video editing environments where a large number of team members work on the same video project.

Bit rot

Bit rot describes the gradual degradation or corruption of data in digital storage. It is a colloquial term for "data degradation", "data decay" or "data rot" in general and can occur on digital media, whether solid-state, rotating magnetic or optical, but also on analog paper media like punched cards. Bit rot is not caused by physical damage or critical device failures but by non-critical failures accumulating over time in a data storage device or medium. Mitigating bit rot requires schemes that detect changed data through integrity checking and, where possible, offer means of recovery through redundancy, error correction codes or self-repairing algorithms that move degraded data segments away from the source of degradation to error-free alternative storage areas or media.

Block cipher

Deterministic algorithms used in cryptography that operate on fixed-size blocks of data, usually consisting of a pair of algorithms, one for encryption and one for decryption. The cipher produces a defined output from a defined input plus key. As decryption is defined as the inverse of encryption, the deciphering step is expected to reproduce the original input.
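
The two properties named above, determinism and decryption being the inverse of encryption, can be demonstrated with a toy Feistel construction. This is an illustrative sketch only and must not be used for real encryption; the block size, round count and SHA-256-based round function are arbitrary choices for this example.

```python
import hashlib

BLOCK_SIZE = 8  # bytes per block in this toy cipher
ROUNDS = 4


def _round_function(half: bytes, key: bytes, round_no: int) -> bytes:
    # Keyed mixing function; it does not need to be invertible itself.
    digest = hashlib.sha256(key + bytes([round_no]) + half).digest()
    return digest[: len(half)]


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt_block(block: bytes, key: bytes) -> bytes:
    if len(block) != BLOCK_SIZE:
        raise ValueError("block must be exactly BLOCK_SIZE bytes")
    left, right = block[:4], block[4:]
    for r in range(ROUNDS):
        left, right = right, _xor(left, _round_function(right, key, r))
    return left + right


def decrypt_block(block: bytes, key: bytes) -> bytes:
    if len(block) != BLOCK_SIZE:
        raise ValueError("block must be exactly BLOCK_SIZE bytes")
    left, right = block[:4], block[4:]
    # Undo the rounds in reverse order.
    for r in reversed(range(ROUNDS)):
        left, right = _xor(right, _round_function(left, key, r)), left
    return left + right


key = b"example key"
plaintext = b"8 bytes!"
ciphertext = encrypt_block(plaintext, key)
print(ciphertext.hex())
print(decrypt_block(ciphertext, key))
```

The same input and key always yield the same ciphertext, and running the rounds backwards restores the original block.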

Block Level

Block Level is the level of a storage system on which a file system is usually formatted. It is an abstraction between physical storage and the file system. Being able to access unformatted space on storage media means having "access to the block level". This is the common understanding of "block level", meaning "block-level access" to a storage device. From the perspective of a traditional file system, the "block level" describes the lowest-level unit of data a file system uses to store data on media (a "block", sometimes "physical record"; this scheme is called "block mapping"). When a file system commits data to storage, it writes one or more such blocks to an HDD (Hard Disk Drive) or SSD (Solid State Drive), depending on the predefined block size and the amount of data to be written. One file may be spread over multiple blocks, and the file system keeps track of these blocks. The block concept provides efficient data access and improves performance because data operations are performed on entire blocks rather than individual bits or bytes. This approach to storing data is one possible abstraction of the underlying hardware and, as available storage space is divided into fixed-size blocks, produces inefficiency when blocks aren't fully used and/or fragmentation of space. There are various approaches to mitigating these inefficiencies, usually by reducing the waste of space inherent in the fixed-size block concept. Some file systems employ "block suballocation" and/or "tail merging" (Btrfs). Others offer the ability to dynamically change the underlying block size (ZFS). The concept of using extents instead of individually addressed blocks is another refinement of the block mapping scheme. Although the block-level abstraction of data storage is usually handled by the file system, some applications employ their own block mapping layer (their own file system format) to optimize block I/O performance, most notably Database Management Systems (DBMS).
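
The inefficiency of fixed-size blocks described above can be made concrete with a little arithmetic. The sketch below assumes a block size of 4096 bytes purely as an example; the function names are illustrative.

```python
def blocks_needed(file_size: int, block_size: int) -> int:
    """Number of whole blocks required to hold `file_size` bytes."""
    return (file_size + block_size - 1) // block_size


def slack_bytes(file_size: int, block_size: int) -> int:
    """Allocated but unused bytes in the last block of the file."""
    return blocks_needed(file_size, block_size) * block_size - file_size


BLOCK_SIZE = 4096  # assumed example value, not a property of any specific file system

for size in (100, 4096, 10_000):
    print(f"{size:>6} bytes -> {blocks_needed(size, BLOCK_SIZE)} block(s), "
          f"{slack_bytes(size, BLOCK_SIZE)} bytes unused")
```

A 100-byte file still occupies a full block, which is the waste that block suballocation and tail merging aim to reduce.
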
DBMS access storage via "block I/O", and this "block storage" may be directly attached via protocols like SCSI or Fibre Channel, or remotely attached via protocols operating over a fabric / SAN (Storage Area Network), like the TCP/IP-based iSCSI (Internet Small Computer Systems Interface) or the AoE (ATA over Ethernet) protocol. The other context where it is common to speak of "block storage" is enterprise cloud storage. These use cases usually require whole system images to be handled, moved and stored. The reason is that, when supervising a multitude of systems, each system is represented by its attached storage, abstracting away the fact that every system itself holds thousands of files. The term "Block Storage" in this regard describes a (virtual) unit that resembles a hard drive, a file system or a volume and is treated as a single "block" or "file" that can be stored, retrieved, mounted and unmounted as (virtual) local storage. As such, cloud block storage, in colloquial use, doesn't necessarily offer the low-level "block I/O" access that, for example, a DBMS would use.

Block Storage

Most storage devices operate by organizing available storage into chunks of a defined size, so-called "blocks". Thus, storage devices are called "block devices", "block storage devices" or simply "block storage". In enterprise IT and in environments where physical systems are increasingly virtualized, "Block Storage" has become an umbrella term for cloud concepts, typically describing means to create and manage virtual volumes that emulate the behavior of a traditional block device. "Block Storage" also describes a perspective on storage volumes, the workflows to handle such "blocks" of data, how the infrastructure that facilitates large numbers of such "blocks" is set up on the backend, etc. In sum, "Block Storage" is a structured type of data storage architecture designed for enterprise environments where high performance, reliability, scalability and flexibility play a crucial role. It separates a host system from its traditional local storage and replaces that local storage with virtualized storage "devices" operating like block storage devices (virtual "local block storage"). These "blocks" (meaning virtual block storage devices) are treated as raw volumes, are comparable to physical hard drives, are commonly organized via unique identifiers (unique addresses) and can be moved between systems, cloned, archived, mounted and unmounted. In this way, a large amount of data can be processed efficiently. Block Storage is popular in cloud storage and virtualization environments and is commonly used for storing data in Storage Area Networks (SANs). There are different ways of accessing storage blocks in a SAN, e.g. via the iSCSI, Fibre Channel or FCoE protocols, each varying in complexity and/or protocol overhead. SANs are usually distributed block storage systems where data blocks are spread over multiple physical servers and sometimes locations.

BMC

Abbreviation for "Baseboard Management Controller" (sometimes "Board Management Controller"): a small dedicated computer system (a microcontroller) embedded into the mainboard (baseboard) of systems that are usually operated remotely, like racked server systems in data centers or NAS systems in off-site locations. A BMC is the central hub for sensor data, system state, power cycling and other means of supervising and controlling the host system. A BMC can be part of the IPMI control stack and may communicate over IPMI protocols. BMC networking is often separate from host system networking, adding a layer of security by using a dedicated communication network or serial connection. The software (client) counterpart of the BMC is the "BMC Management Utility" (BMU), usually a command-line tool for communicating with a remote BMC. Some BMCs offer a web interface.

Btrfs

Btrfs, abbreviated from "B-tree file system", is an advanced file system designed by Chris Mason and used on various Linux distributions. It provides next-generation features such as copy-on-write (CoW) snapshots (file system copies from a specific point in time), built-in volume management with support for software-based RAID (Redundant Array of Independent Disks), fault tolerance, self-repair with automatic detection of silent data corruption, checksums for data and metadata, etc. Wikipedia, Official Website (documentation).

Checksumming

The implementation of a scheme that calculates a small "checksum" from a block of data in order to detect errors or bit rot. Checksumming algorithms, present in file systems and many areas of data storage, are selected based on their speed and reliability. Checksummed data may be the actual data payload of a file, but also a file's metadata.

Checksum algorithm

A sequence of instructions used to generate a small block of data, called a "checksum", from a sequence of bytes, known as a "bitstream". Checksum algorithms and their generated checksums are used to determine whether bitstreams in data I/O have changed. Some of the most popular checksum algorithms are Cyclic Redundancy Check (CRC), Adler-32, Message Digest Algorithm 5 (MD5), Secure Hash Algorithms (SHA), xxHash, Tiger, etc.

Ceph (distributed parallel file system)

Ceph is an open-source parallel distributed file and storage system designed to manage and store vast amounts of data. Ceph is known for its scalable architecture, good performance and reliability. It offers object, block and file storage in one unified system with no single point of failure. Built on its main component, RADOS (Reliable Autonomic Distributed Object Store), Ceph provides an Object Gateway, the RADOS Block Device (RBD) and the Ceph File System (CephFS). Wikipedia, Official website.

CIFS

Short for "Common Internet File System": a file-sharing protocol developed by Microsoft for providing access to files and printers between nodes on a network. It is a newer, enhanced dialect (a particular implementation/version) of the Server Message Block (SMB) protocol and was introduced with Windows 2000.

Cloud Storage

Cloud Storage is a data storage service model where the data is transmitted, stored, and managed on remote storage systems maintained by a third-party service provider. This virtualized storage infrastructure provides accessible interfaces to users over the internet so they can access their data from any device with an internet connection. Cloud storage eliminates the need to purchase, manage, and maintain in-house storage infrastructure. As a broad term, cloud storage may describe end-user use cases or more professional enterprise storage solutions.

Cloning

In data storage, cloning usually describes a method of creating an exact replica of an original dataset, database or whole storage volume, based on a snapshot created at some point in time. Cloning is commonly used for data backup and recovery, migrating operating systems, digital forensics or mass deployment purposes. This process also implies replicating the boot records, settings, metadata and file systems found on the original storage.

Cluster

While "cluster" is a broad term in computer science describing a number of interconnected systems, a cluster in data storage is a group of storage devices connected together to form unified storage, providing scalability, enhanced data availability and distribution, resilience and higher performance. Storage clusters are commonly used in distributed file systems and cloud storage, where the amount of data is constantly growing and clients handle large datasets.

Clustered file system

A clustered file system is a type of file system that provides simultaneous data distribution and retrieval across multiple physical storage servers while appearing as a single logical file system for access and management. There are two distinct architectures of clustered file systems: 1) "shared-disk file systems", examples being IBM's General Parallel File System (GPFS), Red Hat GFS2, BSD HAMMER2, Quantum's StorNext, VMware's VMFS and Sun/Oracle's QFS, and 2) "distributed file systems", examples being Hadoop (HDFS), GlusterFS, CephFS, BeeGFS, Lustre and XtreemFS. In distributed file systems (also called "shared-nothing" file systems), each server in the cluster has its own local storage, while in shared-disk file systems, all servers in the cluster have direct access to a common set of shared storage devices.

Cold Storage

Related to the distinction between "hot" and "cold" spares in RAID systems, and drawing on common knowledge about the behavior of ice and frozen materials, the term "cold" usually describes data that is either seldom accessed or difficult/slow to access (meaning it has to be "thawed" or "unfrozen" before it is available). Cold storage can mean that drives or tapes are physically detached from a computer and stored away. In larger storage or data center contexts, cold storage can mean a scheme where hard drives are spun down in place (put into controller- or software-initiated standby, or physically disconnected from the power supply) to lessen the effects of mechanical wear and/or save energy. Starting up (or reconnecting) a cold storage resource is usually much more time-consuming than accessing a storage system characterized as "hot". While cold data is often associated with data on less performant (and thus less expensive) media, it can just as well describe a (more expensive) backup solution or data vault that is more suitable, secure and/or reliable for long-term storage, an important metric in archival work. Digital data storage in DNA ("DNA Storage", "DNA digital data storage"), where binary information is encoded in synthetic DNA (synthetic deoxyribonucleic acid, using sequences of the nucleotides A, G, T and C), can be one future form of cold storage (as of 2024). There is a gradient between hot, nearline and cold data storage patterns, with varying degrees of what is regarded as nearline or cold storage data. Nearline and cold data can be stored in MAIDs (massive arrays of idle drives), optical jukeboxes or tape libraries.

Coercivity

(also magnetic coercivity, coercive field or coercive force) A measure of the ability of a ferromagnetic material to "remain magnetized", i.e. to withstand an external magnetic field without becoming demagnetized. It is usually used in connection with magnetic media, where it describes the force a read/write head has to exert to magnetize an area on the surface of a magnetic recording medium.

Common Redundant Power Supply (CRPS)

CRPS is a backup power supply configuration with two or more power supply units working together, with only one being active at a time, and sharing a common interface and connector. Each unit must be capable of delivering sufficient electrical power to the system. CRPS devices are commonly deployed in mission-critical environments such as server rooms, data centers and large network equipment installations.

Content-addressable Storage

Content-addressable storage (CAS), sometimes "content-addressed storage" or "fixed-content storage", is a file storage paradigm where content isn't stored by a given name under a given path but, in reverse, is addressed by its contents, usually by hashing the file contents with a defined cryptographic hash function and using the resulting string as the file's identifier. This approach is common in systems that strive to create a single global representation for a large corpus of files, as in public P2P (peer-to-peer) file sharing systems.

Copy on Write

CoW is a resource management technique used in many areas of computer science. In relation to storage, CoW usually refers to a scheme for lightweight, incremental variant copies of original data. When a user accesses data, this data is a set of original data blocks. Once the data read is modified and committed back to storage, a system using CoW writes only the affected data blocks instead of unnecessarily duplicating the entire dataset or re-writing all original blocks. File systems like Btrfs and ZFS are known for offering this feature. As data is forked instead of replaced, CoW is a mild form of snapshotting or incremental backup. Whether older revisions of a modified resource are exposed to the user depends on the implementation. "Copy on Write" may also refer to a data safety scheme where data is never overwritten in place but is always fully written to storage first (generating new data blocks); only after the storage backend has confirmed that the data has been successfully committed are the blocks allocated to the original file freed.

Converged Ethernet

Converged Ethernet (as part of the Data Center Bridging (DCB) initiative) is an enhancement of the Ethernet protocol that improves its fitness for data center and enterprise use. Many Storage Area Network applications and the protocols they use rely on UDP instead of a full TCP stack to improve performance. But Ethernet at its core is a "best effort" network, and UDP messages aren't guaranteed to arrive. Thus, many SAN protocol implementations (e.g. RoCE in select versions) use some mechanism within their own protocol layer to guarantee the arrival of messages. Another approach is to amend the underlying Ethernet layer in such a way that UDP messages are guaranteed to arrive. "Convergence Enhanced Ethernet" (or "Converged Enhanced Ethernet", CEE) is such an extension. As these extensions are still in flux, hardware equipment has to be compatible with them.

CSP (Cloud Service Provider)

A type of IT business that offers and sells (mostly) cloud computing solutions to customers to enable or supplement IT services or solutions on their end. Solutions offered range from compute (CPU/GPU) and storage to more elaborate managed computing offerings. Cloud computing offerings usually comprise the more low-level building blocks of enterprise IT or branch out into the domain of Infrastructure-as-a-Service (IaaS) or Platform-as-a-Service (PaaS), in contrast to higher-level Software-as-a-Service (SaaS) solutions, the offerings of Application Service Providers (ASP). The category of general CSP is sometimes broadly described as offering an X-as-a-Service (XaaS) model, where X may refer to any of the named solutions.

Cyclic Redundancy Check (CRC)

CRC is a method for detecting accidental errors or changes (a checksum algorithm), employed in data transmission and storage to ensure data integrity. In data storage, a CRC value is a fixed-size checksum calculated from input data, such as a file's payload data, and stored together with it on the storage device. When that data is read, the CRC value is recalculated and compared with the originally stored CRC value. If the values match, the data has likely not been corrupted.

Cylinder-Head-Sector

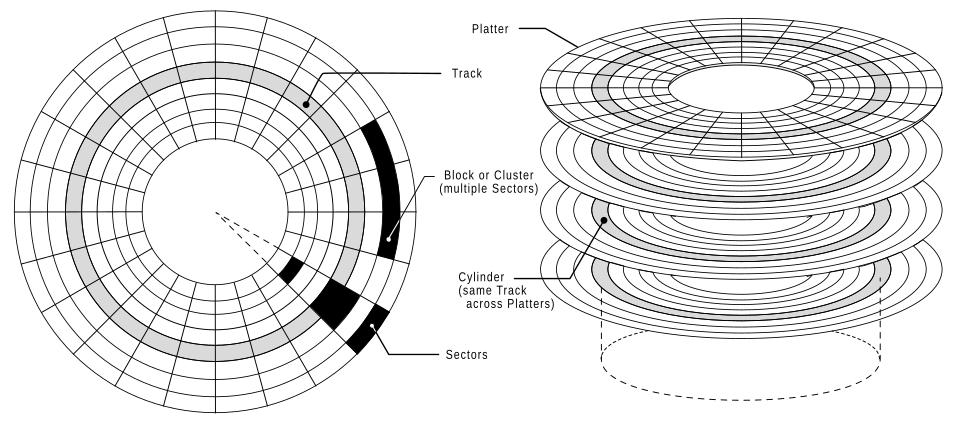

An earlier (obsolete) method of addressing physical blocks of data on a hard disk platter surface (CHS addressing).

Data Corruption

Data Corruption is the unintended introduction of errors into original data during its transmission, reading, writing, storage or processing, resulting in the data becoming unreadable, unusable, unreliable or inaccessible. Many factors cause data corruption, for example data transmission failures, hardware failures, human errors, malware or software bugs. Accordingly, various measures can be deployed for prevention and mitigation, such as Error-Correcting Code (ECC) mechanisms, antivirus software, redundant power supplies, regular backups, etc. One form of silent data corruption (data degradation) is bit rot.

Data Lake

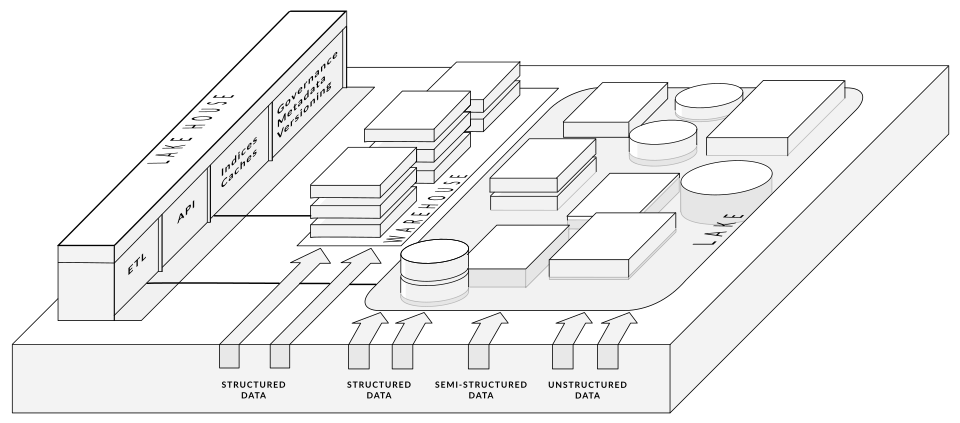

A Data Lake is a central repository that stores large amounts of unstructured data, data in its native (raw) format, using a flat architecture. Flat architecture here means that data is not stored in hierarchical structures and that no means are used to filter, pre-process or structure incoming data. All data is stored as is, lowering costs and handling overhead. This is the opposite of a data warehouse, where data is pre-processed and structured before being stored. While a data warehouse processes contents upon ingest for simple access and analysis, the contents of a data lake are expected to be processed and structured only when they are actually accessed. Data warehouses are usually built in batches, with smaller amounts of structured data, while data lakes are expected to cope with batch imports as well as live-streamed incoming data, like from IoT devices or the Web.

Data Lakehouse

Combination of two principles in data management, "Data Lake" (flexible and cost efficient) and "Data Warehouse" (defined structures for organization and analysis of data), in a database-like structure. The Data Lakehouse approach tries to achieve the best of both worlds.

Deduplication

Deduplication is the process of recognizing and eliminating redundant data from a given dataset. The benefits of deduplication are a decrease in occupied storage, reduction of data operational overheads, optimization of free space on a volume, thus lowering overall storage costs. There are two deduplication methods - inline (redundancies are removed as the data is written to storage) and post-processing deduplication (redundancies are removed after the data is written to storage). There are also two types of data deduplication - "file-level deduplication" (comparing a file with copies already stored) and "block-level deduplication" (searching within a file's blocks and saving unique iterations of each block - if a file is altered, only changed data blocks are saved).

Digital separation

In the context of the film industry (cinema motion pictures), "Digital separation" (also "Three-strip Color-separation Film-out Process", or "Three-Strip Separation") describes a photochemical film preservation technique where a color master is separated by color and then recorded on very high-resolution, highly stable analog black & white roll film. The process is essentially an inversion of what is used in CMYK color printing or the Technicolor three-strip RGB recording format, where components of the color spectrum are recorded individually and are later re-assembled to form the full-color projection image. The guiding idea is that black & white film, as with Microfilm content preservation, is very stable and will remain unchanged over decades. This technique, although expensive and technically complex, is a valid option for large motion picture studios to preserve their moving image output. In today's all-digital movie-making workflows, where image capture and projection are mostly fully digital, film preservation is still a challenge, due to data volume and the requirement to preserve digital content reliably. Thus, a film-out process to an analog, time-tested medium is a valid alternative to LTO tape or similar long-term data archiving solutions.

DMZ

Short for "Demilitarized Zone", DMZ is a term in networking architecture meaning an additional layer or ring of security around a secured network. Between internal networks and external networks, like the Internet, the DMZ acts as an in-between area. With two firewalls, one for traversal from the public Internet to the DMZ and another from the DMZ to the secured network, a potential attacker has to overcome two security perimeters for intrusion, which makes a successful breach of network security less likely.

Distributed file system

One flavor of a "Clustered File System".

Drive bay form-factors

On computer systems, hardware options (sometimes internal, sometimes user facing, and not limited to storage drives, but also media card readers, displays, etc.) are usually added in standardized drive bays, where the size of these drive bays evolved from early industry standards through subsequent formalization. Common sizes are 5.25-inch, 3.5-inch, 2.5-inch and 1.8-inch drive bays, where more recent popularity usually aligns with a smaller footprint. 8.0-inch drives emerged on early computers of the 1960s and 1970s, for floppy drives and hard disk drives. One example is the Micropolis 1203-1 rigid disk drive. The 5.25-inch drive bay envelope followed, and within its defined area drives got smaller: so-called "full-height" (abbreviated as "FH", or "FHT") drives appeared, as well as so-called "half-height" (abbreviated as "HH", or "HHT") drives, occupying only half of the available height. When storage technology got smaller in size, the 3.5-inch, 2.5-inch and 1.8-inch drive bays became common and, as with the larger drive bays, various break-points emerged that use only half the height, half the length, or combinations thereof: HHHL (half height, half length), FHHL (full height, half length), and sometimes "SL" or "1/3HT" for drives slimmer than half-height 3.5-inch drives. You can find many of these abbreviations for drive-bay form-factors on the Micropolis Hard Disk Drive Support pages.

eCryptfs

"Enterprise cryptographic filesystem", abbreviated as eCryptfs, is POSIX-compliant disk encryption software for Linux.

Edge Computing

In complex networks and systems, response times, bandwidth or immediately available compute power are important metrics. Moving resources closer to clients (close to the "edge") improves or bolsters those metrics. As such, "edge computing" is a paradigm in network architecture, distributed systems and part of an overall optimization strategy.

EncFS

is an open-source cryptographic filesystem popular on Linux systems. It transparently encrypts files, using an arbitrary directory as storage for the encrypted files and presenting decrypted files via FUSE mounts.

Edit while Capture

Depending on context, this may also be named "Edit while ingest". In media production workflows, editors often work on video material that has just come in and has to be presented to viewers very quickly, for example in news coverage. At the same time, the actual video material often arrives via video (satellite) feeds or dedicated data link transfers, or has to be copied from recording media. With large files, or with video material being captured from real-time playback, waiting for the ingest or capture to complete would create a large window of unused time, time that could already be used to edit the video material. With file storage solutions tuned for such workflows (and video editing suites supporting these modes), some vendors offer the ability to edit files that are currently being ingested or captured. Essentially, this means the system is able to use portions (usually the front portions) of a file that is still growing on the file system because incoming data is being appended to the file's tail.

EtherDrive

is a brand name of The Brantley Coile Company (formerly Coraid) for storage area network devices based on the ATA over Ethernet protocol.

Ethernet

Ethernet is a set of networking technologies, standards, and protocols applied together to provide stable, fast, and secure wired data transmission in local area networks (LAN) and metropolitan area networks (MAN). It is defined and described by the Institute of Electrical and Electronics Engineers (IEEE) standard 802.3 and operates at the physical and data link layers of the Open Systems Interconnection (OSI) model. Nodes in Ethernet networks use twisted-pair copper and fiber optic cables as the medium.

Erasure Coding

An erasure code, in coding theory, is a "forward error correction" (FEC) code. In storage, the popular Reed-Solomon (RS) group of codes is used to form smaller derivative data that represents the larger actual data, instead of full redundancy, as erasure codes can be used to rebuild the original data from its derivatives. Today (as of 2024) the practice of using erasure codes ("erasure coding") is an important building block of large-scale computer data storage, and RS codes are built into many software storage implementations, like Linux' RAID 6 or Apache Hadoop's HDFS.

Error correction code (ECC)

also "error-detecting code", is one measure in "forward error correction" (FEC), a technique used in many areas where (digital) data is stored or transmitted, in order to detect errors ("error control"). The basic idea is that redundant data is added or calculated so that errors can be detected and, to a certain degree, corrected on the fly.

eSATA

is a standard for external connectivity of SATA devices via eSATA ports and eSATA connectors. It was formalized in 2004. eSATA is not to be confused with "SATAe" (short for "SATA Express"). "eSATAp" describes powered eSATA, aka "Power over eSATA".

ESDI

is short for "Enhanced Small Disk Interface", an obsolete successor of the ST-412/506 interface and a predecessor to SCSI.

ETL Process

ETL is short for "Extract, Transform, Load", a term from the field of database theory, data mining and data wrangling, describing the process of gathering data from multiple, potentially unstructured or disparately structured data sources and combining them into one unified database.

exFAT

Short for "Extensible File Allocation Table", exFAT is a Microsoft file-system from the FAT family of file-systems. It is optimized for flash memory and thus often used on USB sticks and the like.

EXR (Extended Dynamic Range)

Colloquial abbreviation of the OpenEXR image format, a high-dynamic range, multi-channel raster file format developed by Industrial Light & Magic (ILM) for professional film production pipelines, with beginnings in 1999 and a later release under a free software license.

Extent

With regard to file-systems, an Extent is an extension or update of the traditional "block mapping" data storage scheme. While data in "block mapping" is stored as a number of individual blocks possibly spread randomly across the available storage surface, an Extent is a contiguous range of neighboring blocks. In comparison with individual blocks, which are addressed and accessed via their individual numbers, the address of an Extent can be stored as a number range. As an example, it is more efficient to store "blocks 3 .. 9" than to enumerate "blocks 3,4,5,6,7,8,9". Extent-based file-systems usually employ means to mitigate fragmentation, like "Copy-on-Write" (CoW) or "Allocate-on-Flush" (AoF). Btrfs is an example of an Extent-based copy-on-write (CoW) file system, and Linux' Ext4 file-system can be configured to use Extents.

Extended file attributes

Also briefly "Extended attributes", or "xattr" and "xattribs" on Unix-like operating systems, this is a feature of some file systems where a file can be associated with additional metadata that is not strictly required by the file-system in POSIX-like operation. Extended attributes are commonly organized in user-definable key-value pairs. Their size (the data payload storable in an xattr) is usually very limited in comparison to the possible size of the related file, but with common sizes between 255 bytes and 4KiB it is usually large enough to fit textual metadata. The actual size limitation depends on the type of file-system used. Extended attributes are commonly used to store more elaborate file access rules, like a per-file access-control list (ACL), or Dublin Core syntax to describe resources like Image or Video files. While xattribs would be perfectly capable of storing the "file coloring" found in some file managers and in Apple products, this color metadata is mostly stored in a separate database or in sidecar files, contributing to the problem of separating metadata descriptors from the described resource. Apple file systems usually store extended attributes and file metadata in so-called resource forks, separate from a file's payload data.

FAT

The File Allocation Table (FAT) is an older file system developed by Microsoft, initially for storing and retrieving data on small-size media such as floppy disks. Over time, it evolved and came to be used on hard disks. Data in this file system is organized in a tree-like hierarchical structure within directories. Files are stored on the media in collections of data blocks called clusters, with a cluster size of commonly 4 kilobytes in the last version of FAT (FAT32). The FAT32 file system is limited in its maximum file and volume size: FAT32 cannot store files larger than 4 gigabytes, and the volume size is 2 terabytes maximum. Today, FAT32 continues to find use in USB flash drives and memory cards. One newer iteration of the FAT file-system family is exFAT, optimized for flash memory.

Fibre channel (FC)

High-speed data transfer protocol providing in-order, lossless delivery of raw block data. As such it is an elemental technology in SAN and Block Storage. Fibre Channel uses its own network interconnection model, defining layers similar to the OSI or TCP/IP models.

- FC-4 (Protocol Mapping Layer: application protocols, for example encapsulated SCSI)

- FC-3 (Common Services Layer: optional services, like RAID coordination or encryption)

- FC-2 (Network Layer: FC-P's core)

- FC-1 (Data Link Layer: on the wire coding, etc.)

- FC-0 (Physical Layer: lowest layer, cables, connectors)

Fibre Channel over IP (FCIP)

Also "FC/IP", is a technology to transport Fibre Channel communication over an IP connection ("Fibre Channel tunneling", also "storage tunneling"), commonly used to send FC data over distances normally not possible with native FC. FCIP is not "Internet Fibre Channel Protocol" (iFCP).

Fibre Channel over Ethernet (FCoE)

encapsulates FC frames for transport over Ethernet networks. This is commonly used to enable deployments to use Fibre Channel on an Ethernet infrastructure (usually 10 Gigabit Ethernet or faster).

File Level storage (vs. block level storage)

File-level storage, also known as file-based storage, is a data storage method that deploys a hierarchical architecture for storing and organizing unstructured data through files and directories. As file-level access protocols, it uses the Network File System (NFS) for Linux and Unix-based operating systems and the Common Internet File System (CIFS)/Server Message Block (SMB) for Windows. When it comes to scalability, file-based storage is able to scale up (expanding the file storage capacity by adding more storage resources to a single node) and scale out (increasing the performance and capacity simultaneously by adding more file storage nodes). Compared to block-level storage, file-level storage is cheaper, simpler, and easier to manage, but block-level storage is the preferred choice for performance-critical applications due to its direct access to raw storage blocks. File-level storage is commonly deployed in network-attached storage (NAS) and storage area networks (SAN) - if configured with file-level access protocols.

File System

A file system is a collection of methods and structures designed for operating systems and various software applications to name, store, access, retrieve, and organize data on storage devices. This is (traditionally) achieved through a hierarchical arrangement of files into directories and subdirectories. Some file-systems use a non-hierarchical approach (e.g. Semantic File Systems), but these are either niche or offer non-hierarchical access mechanisms on top of traditional hierarchical structures. Additional responsibilities of file-systems include access control, metadata management, encryption, directory structure maintenance, monitoring available free space, enforcement of set per-user quotas, optimizing performance and ensuring data integrity.

File Storage

A computer "file" is a kind of resource that computers and storage systems use to organize data in, usually addressing this data by a "filename". Historically, this is one of many desktop, office and work-environment metaphors found in computer systems and information retrieval. When computers were in their infancy, data used to be "written" (punched) on punchcards, and these cards were commonly organized in file (or filing) cabinets, just like traditional paper files in any office. When data started to be written onto magnetic storage media, the term stuck: coherent data units were still referred to as "files", and the storage layouts they were stored in, either linearly or hierarchically, were then labeled "file systems". Today, when we speak of "file storage", it is usually to distinguish this traditional perspective on data storage from other techniques or variants of data storage or its organization, for example to contrast "file storage" against low-level "block storage", or against schemes where data isn't accessed via its filename but instead by its contents, as in some "object storage" systems.

Fileserver

A file server is a computer that acts as centralized storage, providing data sharing across an organization or network. Its primary responsibility is storing and managing data, enabling other computer clients on the same network to access it. In a file server setup, users interact with a central storage, which serves as a platform for storing, retrieving and managing internal data. The benefits of utilizing a file server include remote access, centralized management, enhanced security, backup capabilities, data recovery options and user control. Private file servers can only be accessed through an organization's intranet or through a virtual private network (VPN), while other file servers may just as well be accessible to the public, via FTP or the Internet.

Flash file system

A specific file system, or a category of file systems, where data access is organized in such a way that it is optimized for flash memory devices. One example of optimization is specific strategies to mitigate "wear" (wear levelling), as flash memory usually allows only a limited number of erase cycles before becoming unreliable.

Floppy disk

A floppy disk is a legacy removable, flexible (thus "floppy"), magnetic storage medium enclosed in a plastic envelope. A magnetic head was used in a stepper-motor driven reading and writing mechanism. On disk, the head usually wrote 40 to 80 tracks, divided into data sectors. Early floppy disks were 8-inch disks. Floppies garnered wide popularity in a sized-down version during the 1980s, with a smaller sleeve measuring 5.25 inches. The flexible plastic envelope was changed to a sturdier hard-plastic shell when disks grew in capacity to a common 1.44 megabytes on a once again down-sized disk of 3.5 inches during the 1990s. A "floppy disk drive" (FDD) is an obsolete hardware device designed for reading data from and writing data to floppy disks. Built into external enclosures, an FDD was one common peripheral component of early personal computer configurations. Internally, the drive mechanics usually had a power and a data interface, with a 4-pin power cable and a 34-pin box connector, matching a flat ribbon cable to an FDD controller.

Micropolis was a major manufacturer of 5.25" form-factor, Shugart-interfaced Floppy Disk Drives during the 1980s and, building on this expertise, moved on to manufacturing "rigid disk" hard disk drives in the 1990s.
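As a worked example of how tracks and sectors add up to the 1.44-megabyte figure mentioned in this entry, the following sketch multiplies out a disk geometry. The geometry values (80 tracks per side, 2 sides, 18 sectors per track, 512 bytes per sector) are the commonly cited layout of a high-density 3.5-inch disk; they are not stated in this glossary and are supplied here as an assumption, and the function name is purely illustrative.

```python
# Assumed geometry of a high-density 3.5-inch floppy disk.
# These values are NOT taken from this glossary; they are the commonly
# cited layout and serve only as an illustration.
TRACKS_PER_SIDE = 80
SIDES = 2
SECTORS_PER_TRACK = 18
BYTES_PER_SECTOR = 512


def floppy_capacity_bytes(tracks=TRACKS_PER_SIDE, sides=SIDES,
                          sectors=SECTORS_PER_TRACK,
                          sector_size=BYTES_PER_SECTOR):
    """Return the raw formatted capacity in bytes for a given geometry."""
    return tracks * sides * sectors * sector_size


capacity = floppy_capacity_bytes()
print(capacity)          # 1474560 bytes
print(capacity // 1024)  # 1440 KiB, marketed as "1.44 MB"
```

Under these assumptions the "1.44 megabytes" label corresponds to 1440 KiB, that is, a mix of decimal and binary prefixes rather than exactly 1.44 million bytes.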

FTP

FTP is the abbreviation of "File Transfer Protocol" and describes a network protocol designed for file transmission between computers using a Transmission Control Protocol/Internet Protocol (TCP/IP) connection, where one computer acts as a server and another as client. It resides in the seventh layer of the Open Systems Interconnection (OSI) model, called the application layer. There are also secure alternatives like File Transfer Protocol Secure (FTPS) or Secure File Transfer Protocol (SFTP, sometimes "SSH File Transfer Protocol", as it operates over an SSH connection), where both commands and data transmission are encrypted in order to overcome the unencrypted nature of traditional FTP.

File virtualization

In file area network (FAN) and network file management (NFM) contexts, a virtualized file is a client representation of a computer file that preserves the traditional file access semantics of paths and hierarchies while at the same time separating the presented file from the actual underlying storage environment, by inserting an abstraction layer between the client and file server, NAS or storage technology.

Filesystem in Userspace (FUSE)

FUSE (derived from Filesystem in Userspace) is a framework designed to provide an interface for userspace programs, allowing them to export a file system to the operating system kernel. It consists of a kernel module, a userspace library (libfuse), and a mount utility (fusermount). The most important capability lies in enabling secure, non-privileged mounts of filesystems on a per-user basis. FUSE has been adapted for deployment on Solaris and Unix-like operating systems, including Linux, macOS, FreeBSD, and NetBSD.

GIO

("Gnome Input/Output") is a library, designed to present programmers with a modern and usable interface to Linux' virtual file system.

Global file systems

A category of clustered, distributed file systems that appear as one uniform file-system hierarchy ("global namespace") to clients.

Gopher

Early Internet Protocol, related to FTP and a predecessor of the World Wide Web (WWW). Users could search, browse, access and distribute their own documents across a global namespace resembling a local file-system hierarchy, the "gopher space".

GPFS

Abandoned name (short for "General Parallel File System") of the IBM product now brand-named "IBM Storage Scale" and previously "IBM Spectrum Scale". It is a high-performance clustered file system software developed by IBM.

Grid file systems

is a broad term for a category of clustered, distributed file systems, meaning data is spread over a "grid" (instead of a single disk or single storage location) to improve reliability and availability, while at the same time appearing as a single uniform resource to client systems.

GVfs

Found in Linux operating systems and usually the abbreviation for "GNOME virtual file system". GVfs is GNOME's userspace virtual filesystem (VFS) designed to work with the I/O abstraction of GIO.

GSS (GPFS Storage Server)

An IBM product, a modularized storage system. GSS 2.0 is based on an IBM System x x3650 M4 server running General Parallel File System (GPFS) 4.1 on NetApp disk enclosures. As of 2024, version 2.0 is now part of the IBM Elastic Storage Server family.

Hadoop (HDFS)

Apache Hadoop is a general framework that allows for the distributed processing of large data sets. One component is the Hadoop Distributed File System (HDFS).

Half-rack format

Comparable to 19-inch rack frame enclosures, a half-rack, 10-inch, or 9.5" rack only uses half the width of a standard 19" rack, thus offering a modular mounting option on a smaller footprint.

HAMR drive

Short for "Heat-assisted magnetic recording", pronounced "hammer", HAMR is a technology in magnetic hard disk drives where heat is used to lower the magnetic coercivity of magnetic disk coatings in order to allow the read/write-head to magnetize particular domains on the rotating drive platter. Technology used to heat the drive's platter surface ranges from microwaves (MAMR) to lasers and surface plasmons. The heating technology usually treats a domain immediately before the read/write-head moves over it, a process that takes less than one nanosecond. The material is heated, written and then cools down on its own. As coercivity quickly rises right after data is written, the magnetization appears as being "baked" into the magnetic surface.

Our Micropolis knowledge database has a more detailed article on HAMR Disk Drives.

HBA (Host Bus Adapter)

Piece of computer hardware, also called host controller or host adapter. While host adapters can also be devices that connect USB or FireWire, the term HBA is mostly used when referring to SCSI and to newer transfer mechanisms such as SATA, SAS, NVMe or Fibre Channel, as host adapters were a common means of connecting early SCSI drives. While early models implemented basic I/O for the host system, later models added hardware fault tolerance schemes like RAID. In Fibre Channel context the term used is usually High Bandwidth Adapter (HBA). InfiniBand controllers are commonly called host channel adapter (HCA).

HDD

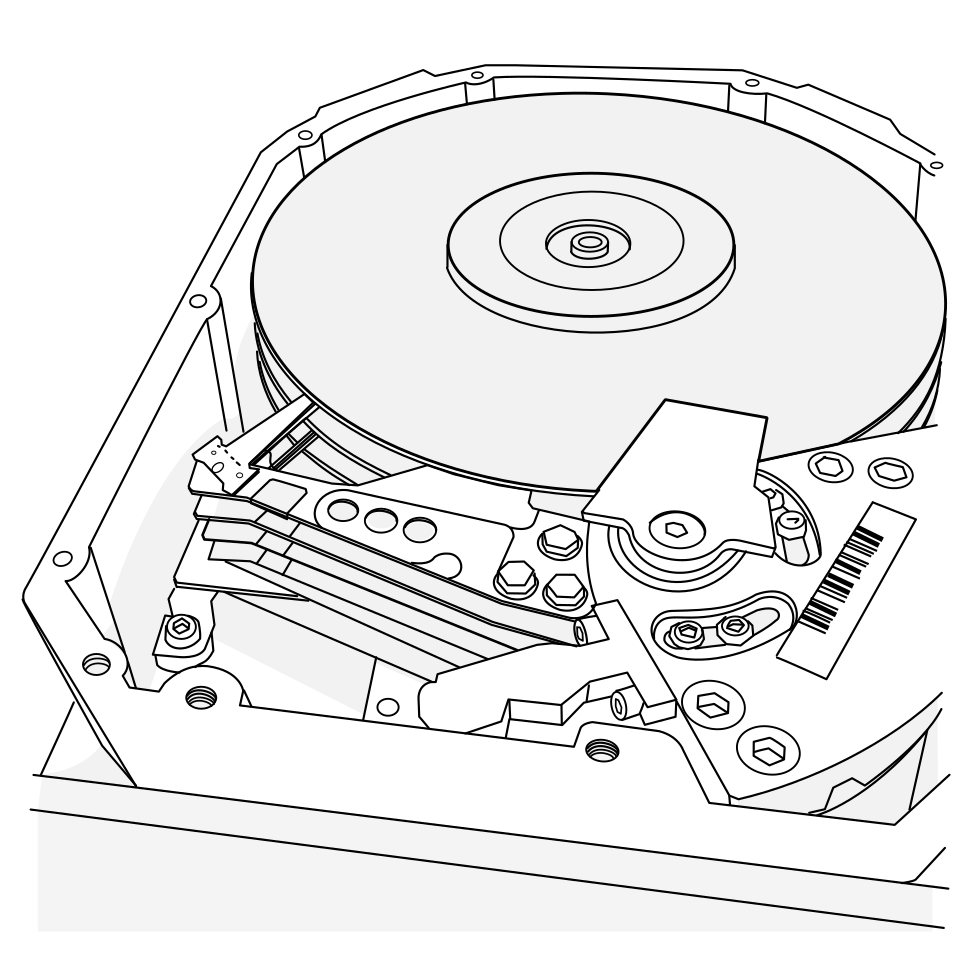

A hard disk drive (HDD), commonly hard disk or hard drive, is a computer storage device that uses electro-mechanical means to store digital data on a rotating magnetizable disk, the hard disk. As the platters in an HDD are made of metal with a surface coating, they were initially also called fixed disk drives or rigid disk drives. In a Hard Disk Drive, a rotating platter similar to that of an audio record player is used to write and read back digital data. A stylus or read/write-head is moved over the surface of the rotating media to write concentric tracks of serial data. In contrast to an audio turntable, the information is not written in one long spiral but on parallel concentric tracks, also called cylinders. Either embedded information in a track or positioning information along a track is used to determine the rotational position of the read/write-head on one of these tracks. Each track is thus divided into data areas called sectors. The rigid disk turns at a defined speed and fast actuators move the read/write-head on an arm between tracks to access data in a near-random fashion. While the disk is made from metal, the actual surface is coated with a special magnetizable coating. The read/write-head uses a magnetic force to magnetize regions, called domains, in a predefined way. The magnetic force exerted by the read/write-head has to be high enough to overcome the coercivity of the coating. As of the 2020s, data density in hard disk drives has become very high, requiring magnetic coatings with a very high coercivity. If coercivity were not so high, individual domains would "bleed" into each other, blurring the boundaries between magnetized areas. This led to the development of read/write-heads that heat a particular region on the disk surface so that the material can be magnetized at all (as found in HAMR and MAMR HD Drives). Once the surface has cooled down again, magnetic data appears to be "baked into" the magnetic surface.

Micropolis was a major hard-drive manufacturer during the 1990s. As an early manufacturer of Floppy Disk Drives, the company used controller expertise and IP to offer early Hard Disk Drives - essentially floppy disk drives with a rigid disk. Prior to the market's swing to smaller HDD form-factors, Micropolis was offering the highest capacity 5.25" HDD with its model 1991 disk drive.
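The cylinders, heads and sectors described in this entry are also the coordinates of the obsolete Cylinder-Head-Sector (CHS) addressing scheme listed earlier in this glossary. As a minimal sketch, the conversion between a CHS triple and a linear block number can be written as below. The function names and the example geometry (16 heads, 63 sectors per track) are assumptions chosen for illustration; the formula is the conventional one, in which sectors are counted from 1 while cylinders and heads are counted from 0.

```python
def chs_to_lba(cylinder, head, sector, heads_per_cylinder, sectors_per_track):
    """Convert a CHS address to a linear block address (LBA).

    Cylinders and heads are 0-based, sectors are 1-based.
    """
    if not 0 <= head < heads_per_cylinder:
        raise ValueError("head out of range for this geometry")
    if not 1 <= sector <= sectors_per_track:
        raise ValueError("sector out of range for this geometry")
    return (cylinder * heads_per_cylinder + head) * sectors_per_track + (sector - 1)


def lba_to_chs(lba, heads_per_cylinder, sectors_per_track):
    """Convert a linear block address back to a (cylinder, head, sector) triple."""
    cylinder, remainder = divmod(lba, heads_per_cylinder * sectors_per_track)
    head, sector_index = divmod(remainder, sectors_per_track)
    return cylinder, head, sector_index + 1


# Hypothetical geometry, for illustration only.
HEADS, SECTORS = 16, 63

print(chs_to_lba(0, 0, 1, HEADS, SECTORS))   # 0, the very first block
print(chs_to_lba(2, 3, 4, HEADS, SECTORS))   # 2208
print(lba_to_chs(2208, HEADS, SECTORS))      # (2, 3, 4)
```

The round trip shows why linear addressing replaced CHS: a single number carries the same information without the host having to know the drive's physical geometry.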

Hierarchical file system

One form of computer file system, and the most common one. Data objects are regarded as files and get stored in collections (of items), also called folders (of files) or directories (with entries). The sum of all files present in a file system thus resembles a hierarchy of directories and files, a directory tree. This structure can be traversed by the user via command-line tools and graphical file managers or file browsers. A file usually has one single path representing its "location" within the file hierarchy and thus "on disk". Some filesystems implement more data object categories (special files): hard links, soft links, junction points, symbolic links, sockets, FIFOs, etc. Hierarchical file systems are just one form. Older media (punched cards) or tape media used linear file systems. Newer approaches, in parts breaking with common usage patterns, are tag-based file systems (semantic file systems, associative file systems).

High availability (HA)

Availability is a characteristic of computer systems. It measures a level of operational performance, with regard to general availability, performance or simple uptime. If a system is aiming at, or defined for, a higher than normal availability, this is commonly referred to as High Availability (HA). As availability of a system is usually measured in percentages ending with multiple "nines", it is common to speak of the "number of nines" in enterprise computing, to describe capabilities of mainframes or similar IT, or an agreed-upon minimum availability defined in Service Level Agreements (SLA). The terms "always on" and Resilience are related. Resilience describes a system's tolerance against faults and challenges during normal operation.

Hot plug

The process of "hot plugging" means removal and/or insertion of a system option during normal operation of the host system. For example, if a storage array offers "hot pluggable SSDs", it means individual disks may be pulled out during normal operation, for example as part of maintenance or to recover from a device's failure, and the host system will continue running without interruption. Hot plug is a feature on the device level and the hardware-interface level, but must just as well be supported by the storage controller and the running storage software.

Hybrid archiving

describes the practice of keeping an analog/original version of some archival matter and a digital/digitized copy of it. For example, newspaper pages have been photographically preserved in microform format on microfilm, but at the same time a digital copy, like a scan or digital photographic reproduction of either the original newspaper or its microfilm representation, is kept on file for long-term preservation.

hybrid cloud

A "hybrid cloud" describes an IT infrastructure that is composed of on-premises, local resources and remote off-site "cloud" resources. For the specific use-case in data storage, described as "hybrid cloud storage", the term refers to a storage infrastructure that combines on-premises storage resources with remote resources, usually in the form of resources offered by a public cloud storage provider.

HyperSCSI

is a failed attempt to operate native SCSI protocol commands over Ethernet, similar to Fibre Channel. It bypassed and skipped a number of elements of the TCP/IP stack to lower overhead and perform more like a native SAN protocol. It was replaced by iSCSI and Fibre Channel.

Interconnection models

In computer networking, data networks can be described as having a specific layer architecture, where each layer represents one level of abstraction. The lowest level is usually the physical layer, the cable layer, while a higher level describes what actually travels over the wire. A number of so-called interconnection "reference models" have been defined and standardized. The ISO/OSI model (short for "Open Systems Interconnection" model) is one, the TCP/IP model ("Transmission Control Protocol/Internet Protocol") is another well-known reference model. But there are more, like the TCP/IP predecessor DoD model (developed by the United States Department of Defense, "DoD") or the Fibre Channel ("FC") model of layer abstraction.

Aligned comparison of OSI, TCP/IP and Fibre Channel interconnection models

| OSI Layer | OSI Name | TCP/IP | Fibre Channel |
|---|---|---|---|
| 5-7 | Application | HTTP, Telnet, FTP, SCSI-3 over TCP/IP (iSCSI) | IP, SCSI-3 FC-P |
| 4 | Transport | TCP, UDP, SCTP, TLS | FC-4 |
| 3 | Network | IP (IPv4, IPv6), ICMP, IGMP | FC-3 |
| 2 | Data Link | Ethernet, Token Ring, Token Bus, FDDI | FC-1, FC-2 |
| 1 | Physical | media | FC-0 |

IPMI

Short for "Intelligent Platform Management Interface", IPMI is a suite of interfaces used to remotely monitor a host system. IPMI is an out-of-band (meaning a separate system) administration tool. In IPMI, a "baseboard management controller" (BMC) is a complete but separate system attached to a host, a microcontroller or low-spec computer system, that acts as a controlling instance of the monitored host (especially servers) and offers communication, its own serial and network ports, etc.

ISV Certified

"ISV" is the abbreviation of "Independent Software Vendor", a broad term to describe the sum of software vendors who are "independent" of the currently dominating vendors of software on the market. As such, basically the majority of vendors in the software market are ISVs. The term is commonly used in conjunction with hardware vendors who build or manufacture systems that are then "certified" by ISVs to be compatible and to perform to a certain spec when executing specific software, usually in specific markets, for example for CAD applications, for medical work, high performance video editing, etc. ISV Certified is not some form of official or independently issued certificate, but part of programmes certain vendors implement according to proprietary terms. It is common to have high performance deskside workstation PCs, or rack mount workstations, as used in A/V editing or 3D visualization studios, be ISV certified.

IOPS

IOPS is an abbreviation for "Input/Output Operations Per Second" and represents a critical metric for storage system performance. It indicates how many input and output operations a storage device can handle per second. IOPS serves as an indicator of storage efficiency and responsiveness, especially in situations where high operation rates are crucial, such as virtualization environments, video streaming, computer gaming, cloud computing, etc. As a performance metric, IOPS is usually reported as "Total IOPS", "Read IOPS" and "Write IOPS".

iSCSI

"Internet Small Computer Systems Interface" is a method to transport native SCSI commands over TCP/IP network infrastructure; more specifically, iSCSI is SCSI-3 over TCP/IP. iSCSI is typically Ethernet-based while Fibre Channel, in comparison, uses its own interconnection model architecture.

IMF ("Interoperable Master Format")

The IMF (Interoperable Master Format) is a standardized and interchangeable master file format developed by the Society of Motion Picture and Television Engineers (SMPTE) to address the entire process of creating, managing, storing, and delivering professional video and audio content. It is usually used in professional A/V workflows to deliver finished works, offering support for multiple language streams, subtitles/captions, etc. ST 2067 is a standard published by SMPTE to provide a comprehensive set of specifications for the interoperable master format, enabling the creation, localization, distribution, and storage of master video and audio content on various platforms. IMF stems from other file-based professional audio/video formats like MXF.

iWARP

In a clear play on the hyperspace speed metric used in the Star Trek feature-film and TV franchise, iWARP (not an acronym for anything) is a computer networking protocol used in "remote direct memory access" (RDMA) to transport RDMA traffic over TCP/IP networking infrastructure. One implementer is Chelsio.

JBOD (Just a Bunch Of Disks)

is a term to describe a number of independently operated disks, an array of independent disks, where no means to achieve data redundancy are used. As such, it is not a RAID system. JBODs are usually disks in a single enclosure. JBODs can be used to support or amend existing RAID systems. Volume management software can be used to connect multiple volumes as one single logical volume, or individual volumes from a JBOD may be separated into logical volumes that are in turn used to assemble, concatenate or extend other volumes. Storage volumes operated in a computer without the volumes acting as fault-mitigating volumes can be described as being a JBOD.

Journaling file system

A type of file-system that offers atomicity and durability by keeping a log of (at least) metadata changes / transactions, by employing a tracking file called a journal, which serves as a transaction log. In case of an unexpected shutdown or system failure, the operating system will use the journal during the reboot process to repair any inconsistencies in file indexes. Log mechanisms are usually implemented as a "write-ahead log" (WAL). Journaling is used to reliably recover file-system integrity after unexpected interruptions, like a power loss or hardware failure.

LAN (Local Area Network)

A computer network that can be described as being smaller than Metropolitan or Wide Area Networks, but larger than Personal Area Networks or ad-hoc networks. LAN usually refers to the type of physical wired networking infrastructure found in home or company networks. Hubs, routers and switches are used to build the network topology. On the wire, the Ethernet protocol is used, commonly at speeds between 10 Mbit/s and 10 Gbit/s over copper cabling. Faster networking speeds usually don't use copper but optical fiber (e.g. 100-Gigabit-Ethernet). The wireless variant of a LAN is typically called a WiFi network, WLAN or Wireless Network.

Latency

In a data storage context, latency measures the time delay between initiating a request and completing the corresponding input-output operation. It is an important performance metric, commonly expressed in milliseconds, providing a value to compare a storage's overall efficiency and effectiveness. Reducing latency enhances performance and can be achieved by optimizing storage architectures, employing caching mechanisms, and using high-performance interfaces.

LDAP

LDAP, short for "Lightweight Directory Access Protocol", is a standard application protocol for accessing and maintaining directory services that hold information about network resources, including users and devices. Such a centralized directory service is commonly employed for authentication, authorization, and information or device lookup within a network. Often used in "Single Sign On" features or organization-wide authentication, the advantages of the LDAP protocol are usually reflected in enhancing overall security, simplifying user management and providing human clients with easier access to network resources.

Local Block Storage

Traditionally, in non-virtualized environments, local block storage refers to physical memory-based drives (e.g. SSDs) or hard disk drives directly connected to a host system. In a tiered perspective on storage, this is usually the fastest (bandwidth, latency) storage available on a system after RAM and other memory-based systems. In virtualized environments, in enterprise IT, datacenters, web hosting or with cloud service providers, it describes virtualized storage volumes that can be treated like physical storage devices: they can be formatted with any given file-system, partitioned, mounted, etc. More colloquially, for example in web hosting product descriptions, "local block storage" (meaning virtualized storage) refers to optional storage that can be attached quickly and on-demand to (virtual) servers.

Log-structured file system

is a type of file-system that writes data and metadata sequentially to a circular log (or "circular buffer"). John K. Ousterhout and Fred Douglis proposed this layout in 1988 under the assumption that future storage systems would be less reliant on relatively slow seeks, with reads served largely from memory cache, making writes the bottleneck. This assumption has since proven partly true, but the design brought a number of other drawbacks.

LTFS (Linear Tape File System)

A file-system designed for use on tape media, allowing clients to access files on linear tape similarly to files on random access media. Originally developed by IBM, it has become an open standard (ISO/IEC 20919:2021) and was moved to be managed by the LTO Consortium in 2010.

LTO

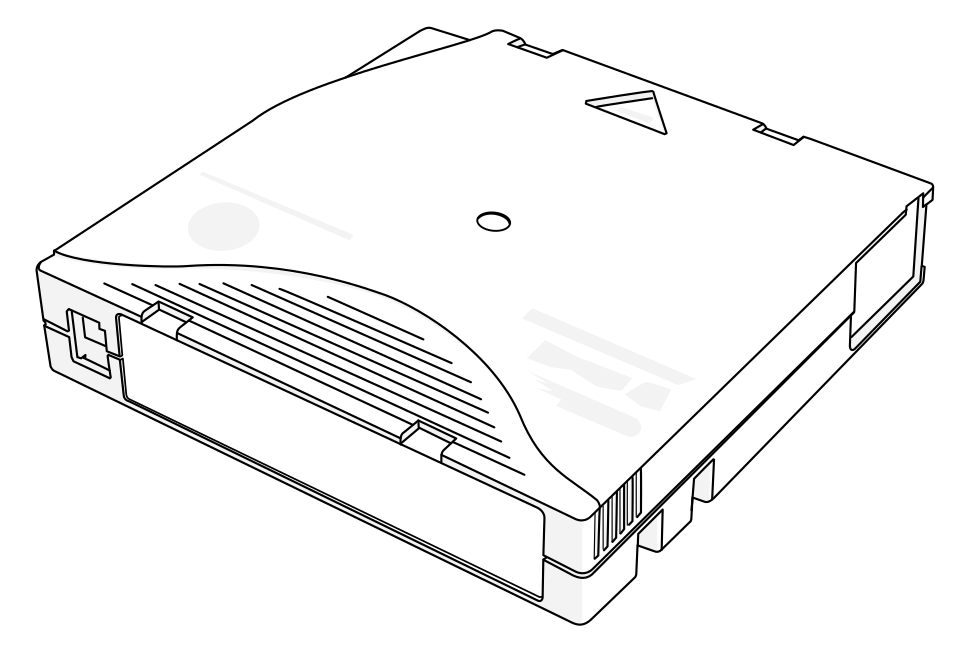

"LTO", an abbreviation of "Linear Tape Open", is a specification for half-inch magnetic tape, its cartridges and matching tape drives. LTO-1 was introduced in 2000 and was able to store 100GB of data. Since then, the LTO format, in various revisions (LTO-2, LTO-3, etc.), has been dominating the market of so called "super tape" formats, data storage tapes of very high capacity. Since version LTO-5, data on LTO tapes is usually stored using the "Linear Tape File System" (LTFS).

Lustre

Lustre (the word being a combination of "Linux" and "Cluster") is a parallel distributed file system popular in large-scale cluster computing and High Performance Computing (HPC).

LUKS

is an abbreviation of "Linux Unified Key Setup" and is a platform-independent standard for disk encryption, using a standardized on-disk format to facilitate compatibility among distributions and enable secure management of multiple user passwords. It originated on Linux and has since found adoption on more platforms.

M.2 (NGFF)

also known by the older name "Next Generation Form Factor" (NGFF), is a specification for computer expansion cards and associated connectors and evolved from the SATA standard. It is a replacement for the slightly older mSATA standard and caters specifically to solid-state storage applications and applications where physical space is limited. M.2 can't be hot-swapped and operates strictly at 3.3 volts.

Massive array of idle drives (MAID)

Assemblies of, or more generally the architecture of using, hundreds or thousands of hard disk drives, most of which are spun down. MAIDs are one form of "cold storage" and are used to facilitate "nearline storage" of data. MAIDs are an answer to data that is "Write Once, Read Occasionally" (WORO).

MAM (Media Asset Management)

Alternative term for "Digital Asset Management" (DAM). MAM software suites usually focus on enterprise-wide management of assets, to help organizations with the challenge of centrally hosting and managing a multitude of digital assets. This can be advertising material or corporate visuals, logos or branded graphics, sound bites, videos, material distributed to customers, etc. MAMs usually offer some kind of approval system, so new assets can be (peer) reviewed by entitled personnel before more general users of the MAM can use these assets in their daily work. The broadcast, television and movie industries are related markets for MAMs, but in this sector, asset management systems are usually tailored to the specific needs of creative, post-production or production workflows. In this field, MAMs are sometimes referred to as "Production Asset Management" (PAM) systems, offering features such as "Bin Locking" and "Edit while Ingest".

MAMR

"MAMR" is short for "Microwave Assisted Magnetic Recording", a recording technology for hard disk drives with very high density magnetic storage. Like "HAMR", it is an energy-assisted recording method, but it uses microwaves instead of heat.

Managed Service Provider (MSP)

is a broad term describing IT solution providers who offer not only building-blocks of IT (here "computer cloud services"), but also the professional operation of such technology (actively managed) as their product. A basic example is managed web hosting, where root server products are sold to customers, but DevOps and regular chores, e.g. updates, are handled by the vendor, usually under some Service Level Agreement (SLA). Cloud Managed Services Provider (CMSP) is a term for mixed solution providers that offer to operate basic cloud services for customers as their service. Some service providers focus on operating IT from a single vendor (pure-play MSPs) while others mix and match technology from multiple vendors to fulfill customer requirements (multi-vendor MSPs).

What is a Media Defect Table?

Older drives, like the Micropolis 1325, have a table sheet printed on one side of the enclosure. It says "Media Defect Table" and lists various numbers, cylinders, etc. This table denotes areas on the physical magnetizable surface of the drive's internal platters that an automated test at the factory found to be faulty after production. The drive will not be able to store data in these areas. Thus, the end user is required to manually enter the areas listed in this defect table into their storage driver, so the system using the drive will not try to store data at these locations.

Source: Our article "What is a Media Defect Table" from the Micropolis support knowledge database.
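
How a storage driver might use such a list can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the defect entries and the drive geometry below are hypothetical values, not taken from any real drive label, and the sketch simplifies each defect to a whole track (cylinder and head), whereas a real table may list finer-grained positions.

```python
from typing import NamedTuple


class Defect(NamedTuple):
    """One hypothetical defect table entry, simplified to a whole track."""
    cylinder: int
    head: int


# Hypothetical entries as a user might type them in from the printed label.
DEFECTS = frozenset({Defect(112, 3), Defect(587, 0), Defect(901, 6)})


def is_usable(cylinder: int, head: int, defects=DEFECTS) -> bool:
    """Return True if the track is not listed in the defect table."""
    return Defect(cylinder, head) not in defects


def usable_tracks(cylinders: int, heads: int, defects=DEFECTS):
    """List all (cylinder, head) pairs the driver may allocate."""
    return [
        (c, h)
        for c in range(cylinders)
        for h in range(heads)
        if is_usable(c, h, defects)
    ]


if __name__ == "__main__":
    # Illustrative geometry only: 1024 cylinders, 8 heads.
    tracks = usable_tracks(1024, 8)
    print(len(tracks), "of", 1024 * 8, "tracks usable")
```

The point of the manual entry step is simply that the driver consults this list before every allocation and never places data on a listed location.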

Metadata

Metadata generally means an information set used to describe other data, thus offering contextual insights into specific data. It provides information such as the origin of data, date of creation or modification, location, ownership, size, purpose, etc. There are six distinctive types of metadata: structural, descriptive, preservational, administrative, provenance and definitional. Metadata simplifies search and retrieval processes. Metadata helps organize and categorize data, making data easier to manage and maintain.

Metadata controller

In clustered or distributed file systems, a "metadata controller" (or "metadata server", "director", etc.) is a system or daemon acting as a director for all requests asking for metadata about a file within the file-system or the allocation/retrieval layer of (global) file-systems. Its implementation, and the exact definition of what a metadata controller does, depends highly on the implementing file-system or data storage system. Some file systems separate metadata requests from data payload requests and split these tasks between separate systems for performance reasons. A metadata directing layer that sits between client and data may add complexity or become a performance bottleneck, thus many implementations keep the actual read of payload data apart from metadata operations. Sometimes the separation of such elements is configurable, sometimes storage solutions separate data payload and metadata by design. With distributed or clustered file-systems, a metadata controller usually also fulfills storage allocation duties (write) and aids in fulfilling read requests by directing a reading instance to where the data is actually stored, on which machine or in which physical location. Examples of metadata controllers are Quantum's StorNext file-system component "Metadata Controller System" (MDC system), as part of its optional separation of payload data, metadata and journaling; CephFS's "Metadata Server" (MDS), which keeps metadata about files in CephFS and also caches hot metadata requests, manages spare metadata service instances, synchronizes distributed node caches and writes the file-system's log journal; and BeeGFS's "metadata node" server, which has similar tasks and stores metadata not in a custom database but in the extended file attributes of locally stored zero-size Ext4 shadow copies of files on the file-system.

MFM Drive Interface

Being a modification of the original frequency modulation ("FM") encoding used in early magnetic storage, modified frequency modulation (MFM) is, as the name implies, an improved variant of FM: a run-length limited (RLL) line code that usually achieves double the information density of FM because it puts clock and data into the same "clock window". MFM was used on some hard disk drives and in most floppy disk formats.
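
The encoding rule can be sketched in Python as follows. This is a minimal illustration, not drive firmware: it uses the commonly described MFM rule that every data bit becomes a clock cell followed by a data cell, with the clock cell set to 1 only between two consecutive 0 data bits. The function names and the assumption that the bit preceding the stream is 0 are choices made for this sketch.

```python
def mfm_encode(bits, prev=0):
    """Encode data bits into MFM cells (clock cell, data cell per bit).

    `prev` is the data bit assumed to precede the stream (assumption: 0).
    """
    cells = []
    for bit in bits:
        # A clock pulse is written only between two consecutive 0 bits.
        clock = 1 if (prev == 0 and bit == 0) else 0
        cells.extend((clock, bit))
        prev = bit
    return cells


def mfm_decode(cells):
    """Recover the data bits: every second cell carries the data."""
    return list(cells[1::2])


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 0]
    encoded = mfm_encode(data)
    print(encoded)
    print(mfm_decode(encoded) == data)
```

Because a clock pulse only appears where the data itself provides no transition, two 1 cells are never adjacent. This spacing between flux transitions is what allows MFM cells to be packed more densely than FM cells.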

The Micropolis support knowledge database has a more detailed article about "MFM drives and common interfaces".

Microform